Pittsburgh-based Ansys announced it will collaborate with Sony Semiconductor Solutions Corporation to test perception in autonomous vehicle sensors.

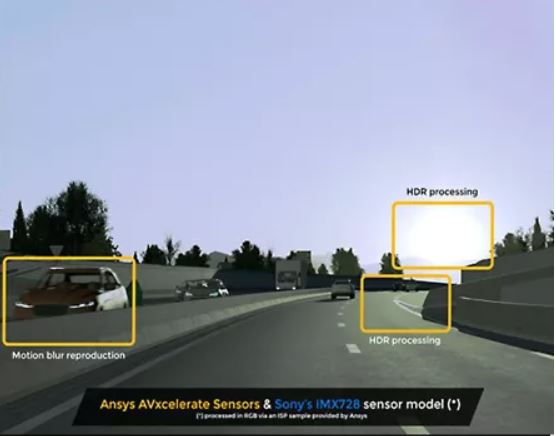

Using Ansys AVxcelerate Sensors, the companies will run multispectral camera simulations for scenario-based perception testing, the companies said. The tests will combine AVxcelerate Sensors with Sony’s high dynamic range (HDR) Image Sensor Model to evaluate advanced driver assistance systems and AV functions. They will also allow the companies to assess the sensors under different lighting scenarios and weather conditions, including rain, snow and fog.

Autonomous vehicles (AVs) and advanced driver assistance systems (ADAS) rely on camera, radar and LiDAR sensor-based perception systems to evaluate the conditions around the vehicle and make navigational decisions. Without the ability to validate these systems, manufacturers and suppliers risk more safety issues, more regulatory challenges and reduced trust, officials said. The two companies said their testing with high-fidelity simulations would improve performance, reduce risk and shorten development times.

“Achieving full autonomy involves OEMs working with leading technology providers like Ansys to enhance the accuracy of the integrated tools used to validate AV systems,” said Tomoki Seita, general manager, automotive business division, Sony Semiconductor Solutions Corporation. “Through this collaboration, customers can confidently verify their systems using highly reproducible, predictively accurate simulations. This is especially useful for OEMs and Tier 1 suppliers that run actual camera simulations to verify recognition algorithms and vehicle control software.”

Ansys officials said the AVxcelerate Sensors platform can generate a virtual environment with varied lighting, weather and material conditions, simulating how light travels through the environment, the camera lens and then the imager. When the platform is combined with Sony’s sensor model, officials said, the simulation can reproduce the pixel characteristics, signal processing functions and system functions with predictive accuracy. The simulation model lets users test in different scenarios, which will improve accuracy, reliability and safety in ADAS and AV applications, the companies said.